Accelerate AI Development with AlexHost’s Cutting-Edge GPU Hosting

In the rapidly evolving landscape of artificial intelligence, computational power is paramount. Whether you’re training large language models, deploying computer vision applications, or developing generative AI systems, AlexHost’s GPU hosting solutions provide the robust infrastructure necessary to meet these demands.

Built for Demanding AI Workloads

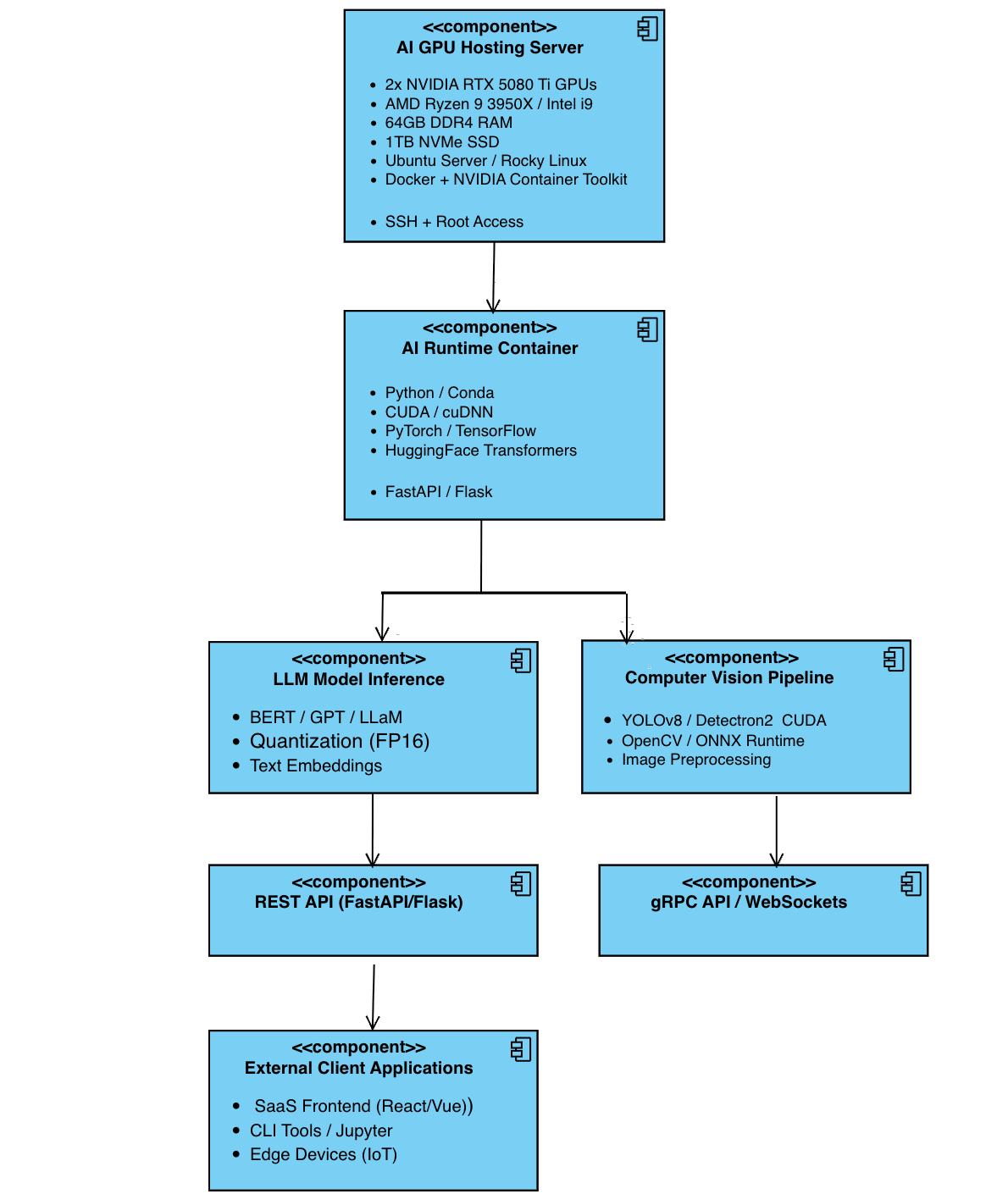

AlexHost’s GPU hosting infrastructure is built to support intensive AI workloads, with an emphasis on deep learning, model training, and large-scale inference. Servers are powered by 2x NVIDIA GeForce RTX 5080 Ti GPUs, which offer high CUDA-core counts, strong memory bandwidth, and massive parallel computing capability.

These systems are optimized for advanced AI applications, including:

- Supervised and unsupervised deep learning training requiring sustained FP16/FP32 computation and high VRAM utilization.

- Fine-tuning transformer-based models (e.g., BERT and GPT-style LLMs), as well as diffusion models and GANs, all of which demand substantial memory throughput and benefit from fast data movement between GPUs.

- Natural Language Processing (NLP) tasks like sequence modeling, text classification, and contextual embeddings, leveraging high core parallelism and GPU acceleration.

- Computer Vision applications, including real-time object detection, segmentation, OCR, and multi-frame surveillance analytics.

- Edge AI and Federated Learning scenarios, such as aggregating updates from distributed, privacy-preserving training or preparing models for on-device inference, where low-latency GPU processing is crucial.
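Whether a given model fits these workloads depends mostly on VRAM. The sketch below gives a rough per-GPU estimate based on parameter count. It relies on common rule-of-thumb byte counts, which are assumptions rather than AlexHost figures: about 2 bytes per parameter for FP16/BF16 inference weights, and about 16 bytes per parameter for full fine-tuning with mixed-precision Adam. The 16 bytes break down as FP16 weights and gradients plus an FP32 master copy and two optimizer moments. Activations, KV cache, and framework overhead are ignored, so real usage will be higher.

```python
# Rough VRAM sizing sketch. Byte-per-parameter values are rules of thumb
# (assumptions); activations, KV cache, and framework overhead are ignored.

BYTES_PER_PARAM = {
    "fp16_inference": 2,         # FP16/BF16 weights only
    "fp32_inference": 4,         # FP32 weights only
    "mixed_precision_adam": 16,  # FP16 weights + grads, FP32 master copy, Adam m and v
}

GPU_VRAM_GB = 16  # per-GPU memory from the configuration described in this article


def estimate_gb(num_params: float, mode: str) -> float:
    """Estimated model-state memory in decimal GB (1 GB = 1e9 bytes).

    Decimal GB slightly overstates usage relative to a 16 GiB card,
    so the fit check below errs on the conservative side.
    """
    return num_params * BYTES_PER_PARAM[mode] / 1e9


def fits_on_one_gpu(num_params: float, mode: str) -> bool:
    """True if the model state alone fits in a single GPU's VRAM."""
    return estimate_gb(num_params, mode) <= GPU_VRAM_GB


if __name__ == "__main__":
    for params in (1.3e9, 7e9):
        for mode in BYTES_PER_PARAM:
            gb = estimate_gb(params, mode)
            print(f"{params / 1e9:.1f}B params, {mode}: ~{gb:.1f} GB, "
                  f"fits on one GPU: {fits_on_one_gpu(params, mode)}")
```

Under these assumptions, a 7B-parameter model needs about 14 GB for FP16 weights alone, which nearly fills one card before activations are counted. Full Adam fine-tuning of even a 1.3B model needs about 20.8 GB, more than one card holds. Larger jobs therefore need to shard model state across both GPUs or use parameter-efficient methods such as LoRA.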

AlexHost’s infrastructure supports PCIe pass-through access and true bare-metal deployments, eliminating virtualization overhead and giving users direct access to the full GPU resource stack. This is critical for scenarios that require precise hardware tuning, deterministic performance, and compatibility with the low-level CUDA libraries that ML frameworks build on (e.g., NCCL, cuDNN, TensorRT).
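On a bare-metal server, one way to confirm that both GPUs are exposed directly, each with its full memory, is to query the NVIDIA driver. The sketch below runs `nvidia-smi --query-gpu=index,name,memory.total --format=csv,noheader,nounits` and parses the CSV it prints. The `GpuInfo` and `parse_gpu_query` names are hypothetical helpers, and running the query requires the NVIDIA driver to be installed.

```python
import shutil
import subprocess
from dataclasses import dataclass


@dataclass
class GpuInfo:
    index: int
    name: str
    memory_total_mib: int  # with "nounits", nvidia-smi reports memory in MiB


QUERY = ["nvidia-smi", "--query-gpu=index,name,memory.total",
         "--format=csv,noheader,nounits"]


def parse_gpu_query(output: str) -> list[GpuInfo]:
    """Parse lines such as '0, GPU Name, 16376' into GpuInfo records."""
    gpus = []
    for line in output.strip().splitlines():
        if not line.strip():
            continue
        # Index is the first field and memory the last, so a name that
        # happens to contain commas still parses correctly.
        index, rest = line.split(",", 1)
        name, memory = rest.rsplit(",", 1)
        gpus.append(GpuInfo(int(index), name.strip(), int(memory)))
    return gpus


def query_gpus() -> list[GpuInfo]:
    """Run nvidia-smi and return the GPUs visible to the driver."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_gpu_query(result.stdout)


if __name__ == "__main__":
    if shutil.which("nvidia-smi") is None:
        print("nvidia-smi not found; run this on the GPU server itself.")
    else:
        for gpu in query_gpus():
            print(f"GPU {gpu.index}: {gpu.name}, {gpu.memory_total_mib} MiB")
```

On a dual-GPU server, you would expect two entries, with indices 0 and 1.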

Configurations that Scale with You

AlexHost offers a modular and performance-tuned GPU server portfolio designed to scale with the evolving demands of AI development. Users can choose from diverse hardware configurations, including:

- GPUs: 2x NVIDIA GeForce RTX 5080 Ti, each with 16GB GDDR6X memory, for 32GB of VRAM in total. Each GPU addresses its own 16GB, so models larger than that must be split across both cards.

- CPU (Intel option): Intel Core i9-7900X, 10 cores, suited to tasks that benefit from high per-core clock speeds.

- CPU (AMD option): AMD Ryzen™ 9 3950X, 16 cores, offering robust multi-threaded performance.

- Memory: 64GB DDR4 RAM, ensuring ample capacity for complex, multi-threaded AI tasks.

- Storage: 1TB NVMe SSD, providing high-speed data access for large datasets and model checkpoints.

- Network: 1 Gbps bandwidth (about 125 MB/s at line rate) for dataset uploads, checkpoint transfers, and real-time inference traffic.

- IP Configuration: 1 IPv4/IPv6 address included.
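For planning purposes, the time it takes to move data over the 1 Gbps link is simple arithmetic: bits divided by bits per second. The sketch below computes it. The `efficiency` factor, which stands in for protocol overhead, is an assumed value rather than a measured AlexHost figure.

```python
def transfer_seconds(size_gb: float, link_gbps: float = 1.0,
                     efficiency: float = 1.0) -> float:
    """Seconds to move size_gb gigabytes (1 GB = 1e9 bytes) over a link
    of link_gbps gigabits per second.

    efficiency < 1 models protocol overhead; it is an assumption.
    """
    if link_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("link_gbps must be > 0 and efficiency in (0, 1]")
    bits = size_gb * 1e9 * 8
    return bits / (link_gbps * 1e9 * efficiency)


if __name__ == "__main__":
    for size in (10, 100, 500):
        ideal = transfer_seconds(size)
        assumed = transfer_seconds(size, efficiency=0.9)  # assumed 90% goodput
        print(f"{size} GB: {ideal / 60:.1f} min at line rate, "
              f"{assumed / 60:.1f} min at 90% efficiency")
```

At full line rate, a 100 GB dataset takes 800 seconds, or roughly 13 minutes. That is comfortable for staging data and checkpoints. It is a tighter budget for multi-node training that exchanges gradients every step.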

These configurations are ideal for startups building AI-powered SaaS products, research institutions running complex simulations, or enterprises deploying large-scale AI models.

Privacy-First Hosting for AI Innovation

Beyond raw performance and scalability, AlexHost prioritizes data protection, infrastructure isolation, and sovereign compute control, making it an ideal environment for privacy-sensitive AI development and deployment.

Full Data Control & Compliance Isolation

AlexHost delivers bare-metal GPU servers with exclusive hardware allocation, ensuring that:

- There are no virtualization layers or shared hypervisors that could introduce side-channel leakage risks.

- All memory, GPU, and disk resources are isolated per customer, eliminating noisy-neighbor effects and unauthorized cross-tenant access.

- There is no shared tenancy: your models, datasets, and runtime environments remain entirely under your control.

This infrastructure is particularly suited for:

- Federated Learning and confidential AI training where datasets must remain local to the server.

- Privacy-preserving model fine-tuning using sensitive medical, legal, or financial data.

- Secure, on-premises-style cloud computing for enterprise clients that need full control over where and how their data is processed.
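In federated learning, datasets stay local because participants share only model updates, never raw data. The sketch below illustrates the aggregation step using FedAvg-style weighting, in which each client’s parameters are averaged in proportion to its number of local training samples. Plain Python lists stand in for real tensors, and `federated_average` is a hypothetical helper, not part of any specific framework.

```python
from typing import Sequence


def federated_average(client_weights: Sequence[Sequence[float]],
                      client_sizes: Sequence[int]) -> list[float]:
    """Average client parameter vectors, weighted by local sample counts.

    Only the parameter vectors reach this function; each client's raw
    training data never leaves the client.
    """
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need at least one client and one sample count per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    dim = len(client_weights[0])
    if any(len(w) != dim for w in client_weights):
        raise ValueError("all clients must send vectors of the same length")

    averaged = [0.0] * dim
    for weights, size in zip(client_weights, client_sizes):
        share = size / total  # this client's fraction of all training samples
        for i, value in enumerate(weights):
            averaged[i] += share * value
    return averaged


if __name__ == "__main__":
    # Two hypothetical clients: the second holds three times as much data.
    print(federated_average([[1.0, 2.0], [3.0, 4.0]], [1, 3]))
```

Because the second client holds 75% of the samples, the result lands closer to its parameters.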

Bare-Metal Architecture for Sensitive AI Development

Our bare-metal GPU hosting ensures:

- Full root access to the host system, with the ability to install hardened Linux kernels, audit logging tools, disk encryption (LUKS), or secure enclaves.

- Native support for low-level optimization libraries (cuDNN, NCCL, TensorRT) with deterministic performance and maximum GPU throughput.

- Ability to deploy air-gapped-style training nodes that are cut off from the public internet through custom firewall/NAT configurations.
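Firewall-based isolation of this kind usually means "deny everything, then allow a narrow exception." The sketch below generates an nftables ruleset that drops all inbound and outbound traffic except three categories: loopback traffic, already-established flows, and a single management network. The table name and the example CIDR (192.0.2.0/24, a documentation range) are hypothetical, and ICMP/IPv6 neighbor discovery is not handled. Review the output before loading it with `nft -f`, because a drop policy applied over SSH from outside the management range will lock you out.

```python
import ipaddress


def airgap_ruleset(mgmt_cidr: str, table: str = "airgap") -> str:
    """Build an nftables ruleset allowing only loopback, established flows,
    and one management network. mgmt_cidr is an illustrative value."""
    net = ipaddress.ip_network(mgmt_cidr)  # raises ValueError on bad input
    family = "ip" if net.version == 4 else "ip6"
    return f"""table inet {table} {{
    chain input {{
        type filter hook input priority 0; policy drop;
        iif "lo" accept
        ct state established,related accept
        {family} saddr {net} accept
    }}
    chain output {{
        type filter hook output priority 0; policy drop;
        oif "lo" accept
        ct state established,related accept
        {family} daddr {net} accept
    }}
}}
"""


if __name__ == "__main__":
    print(airgap_ruleset("192.0.2.0/24"))
```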

AlexHost clients can also bring their own hypervisor stack (e.g., Proxmox, KVM, Xen) to build private clouds on top of GPU-capable nodes, giving DevOps and MLOps teams full control over infrastructure and workload scheduling.
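Passing a GPU through to a guest VM under KVM-based stacks relies on the host IOMMU. Every device in an IOMMU group must be assigned together. The sketch below lists groups from `/sys/kernel/iommu_groups`, the standard Linux sysfs location. An empty result usually means the IOMMU is disabled in firmware or on the kernel command line (for example, `intel_iommu=on` on Intel hosts). The `iommu_groups` helper is a hypothetical name.

```python
from pathlib import Path


def iommu_groups(root: str = "/sys/kernel/iommu_groups") -> dict[int, list[str]]:
    """Map each IOMMU group number to the sorted PCI addresses it contains."""
    base = Path(root)
    if not base.is_dir():
        return {}  # no IOMMU groups exposed: passthrough is likely unavailable
    groups: dict[int, list[str]] = {}
    for group_dir in base.iterdir():
        devices = group_dir / "devices"
        if group_dir.name.isdigit() and devices.is_dir():
            # Entries are symlinks named after PCI addresses, e.g. 0000:01:00.0
            groups[int(group_dir.name)] = sorted(d.name for d in devices.iterdir())
    return groups


if __name__ == "__main__":
    found = iommu_groups()
    if not found:
        print("No IOMMU groups found; PCIe passthrough is likely unavailable.")
    for number in sorted(found):
        print(f"group {number}: {', '.join(found[number])}")
```

Ideally, each GPU sits in its own group, usually together with its HDMI audio function, so it can be handed to a VM without taking unrelated devices along.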